New: Dubbing 3.0 is here. Smarter translations, cleaner audio, and more natural multi-speaker dubbing.

The most intelligent video AI platform

AI video APIs powering thousands of developers and enterprises, from audiovisual search and understanding to the leading video translation and content editing tools.

Translate video and audio content with best-in-class quality, control, and scale.

Trusted by 1500+ leading companies

Built for developers

Built-in flexibility

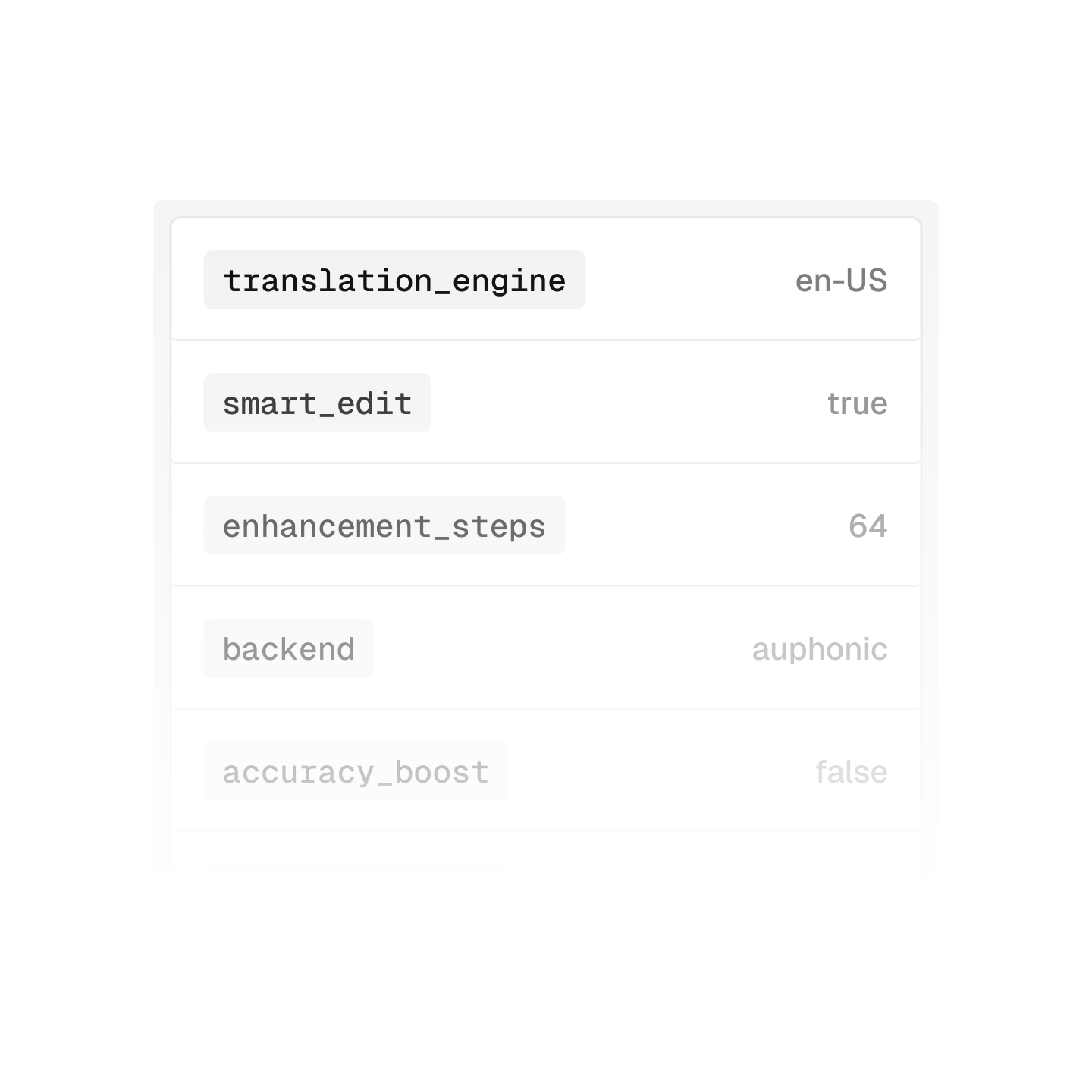

Access the right levers over cost, quality, speed, and functionality based on your use case.

Extreme scale

Automatically scale API requests to process hundreds of millions of media files per day.

Large solution library

A single platform to handle many use cases, reducing third-party overhead.

Simple integration

Add video AI capabilities to your product through straightforward API requests.

Powering a variety of use cases

Creative and Social Platforms

Ship world-class AI features with production-grade APIs and modular infrastructure designed for video.

Model Developers

Process large video datasets with reliable APIs for collecting, filtering, and annotating internet video.

Media Companies

Process existing media libraries with high-quality APIs that can analyze, repurpose, and reformat content.

Used by Industry Leaders

Built for teams at the bleeding edge of video AI

Kaiber uses Sieve for our production AI video gen workloads. Our end-to-end shipping speed would be notably slower without Sieve, as they handle so many common, yet complex requirements out of the box. In addition, their team is exceptionally communicative and has always worked quickly to address our feedback.

Eric Gao, CTO

Zight uses Sieve to power all our production AI capabilities. Our team loves the fact that Sieve is the one roof all our video AI features can live under. It allows us to move at unparalleled speed from prototype → production.

Phin Hochart, Head of Product

Sieve helped us scale large data workloads and train state-of-the-art generative models. They are super responsive to custom requests and were a great partner to work with.

Naeem Talukder, CEO

We chose Sieve because it worked seamlessly out of the box—just input a video, and it delivered the exact format we needed with excellent quality. The developer experience was also exceptional, making it a clear choice for us.

Archie Edwards, Senior Software Engineer

Built for scale

Enterprise SLAs

Uptime & processing SLAs for ad-hoc, large batch, and production use cases.

Dedicated support

Tailored onboarding, customization, and support from our team.

Volume discounts

Significant discounts that enable enterprise scale.

Scalable API

Built to process millions of hours of video at any given moment.

Secure

End-to-end encryption, custom data retention, and SOC 2 Type 2 compliance.